pyerrors

What is pyerrors?

pyerrors is a python package for error computation and propagation of Markov chain Monte Carlo data.

It is based on the gamma method arXiv:hep-lat/0306017. Some of its features are:

- automatic differentiation for exact linear error propagation as suggested in arXiv:1809.01289 (partly based on the autograd package).

- treatment of slow modes in the simulation as suggested in arXiv:1009.5228.

- coherent error propagation for data from different Markov chains.

- non-linear fits with x- and y-errors and exact linear error propagation based on automatic differentiation as introduced in arXiv:1809.01289.

- real and complex matrix operations and their error propagation based on automatic differentiation (Matrix inverse, Cholesky decomposition, calculation of eigenvalues and eigenvectors, singular value decomposition...).

More detailed examples can be found in the GitHub repository.

If you use pyerrors for research that leads to a publication please consider citing:

- Fabian Joswig, Simon Kuberski, Justus T. Kuhlmann, Jan Neuendorf, pyerrors: a python framework for error analysis of Monte Carlo data. Comput.Phys.Commun. 288 (2023) 108750.

- Ulli Wolff, Monte Carlo errors with less errors. Comput.Phys.Commun. 156 (2004) 143-153, Comput.Phys.Commun. 176 (2007) 383 (erratum).

- Alberto Ramos, Automatic differentiation for error analysis of Monte Carlo data. Comput.Phys.Commun. 238 (2019) 19-35.

and

- Stefan Schaefer, Rainer Sommer, Francesco Virotta, Critical slowing down and error analysis in lattice QCD simulations. Nucl.Phys.B 845 (2011) 93-119.

where applicable.

There exist similar publicly available implementations of gamma method error analysis suites in Fortran, Julia and Python.

Installation

Install the most recent release using pip and pypi:

python -m pip install pyerrors # Fresh install

python -m pip install -U pyerrors # Update

Install the most recent release using conda and conda-forge:

conda install -c conda-forge pyerrors # Fresh install

conda update -c conda-forge pyerrors # Update

Install the current develop version:

python -m pip install git+https://github.com/fjosw/pyerrors.git@develop

Basic example

import numpy as np

import pyerrors as pe

my_obs = pe.Obs([samples], ['ensemble_name']) # Initialize an Obs object

my_new_obs = 2 * np.log(my_obs) / my_obs ** 2 # Construct derived Obs object

my_new_obs.gamma_method() # Estimate the statistical error

print(my_new_obs) # Print the result to stdout

> 0.31498(72)

The Obs class

pyerrors introduces a new datatype, Obs, which simplifies error propagation and estimation for auto- and cross-correlated data.

An Obs object is initialized with two arguments. The first is a list containing the samples for an observable from a Monte Carlo chain.

The samples can be provided either as a Python list or as a numpy array.

The second argument is a list containing the names of the respective Monte Carlo chains as strings. These strings uniquely identify a Monte Carlo chain/ensemble. It is crucial for the correct error propagation that observations from the same Monte Carlo history are labeled with the same name. See Multiple ensembles/replica for details.

import pyerrors as pe

my_obs = pe.Obs([samples], ['ensemble_name'])

Error propagation

When performing mathematical operations on Obs objects the correct error propagation is intrinsically taken care of using a first order Taylor expansion

$$\delta_f^i=\sum_\alpha \bar{f}_\alpha \delta_\alpha^i\,,\quad \delta_\alpha^i=a_\alpha^i-\bar{a}_\alpha\,,$$

as introduced in arXiv:hep-lat/0306017.

The required derivatives $\bar{f}_\alpha$ are evaluated up to machine precision via automatic differentiation as suggested in arXiv:1809.01289.
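As a rough illustration of the expansion above, the following sketch propagates the fluctuations by hand for $f=\log(a_1/a_2)$ using plain numpy. It does not call pyerrors: the synthetic samples, the variable names and the hand-written gradient are assumptions made for this example, whereas pyerrors obtains the derivatives via automatic differentiation.

```python
import numpy as np

# Synthetic, uncorrelated samples standing in for two primary observables
rng = np.random.default_rng(0)
a1 = rng.normal(2.0, 0.1, size=1000)
a2 = rng.normal(1.0, 0.1, size=1000)

# Mean values and per-configuration fluctuations delta_alpha^i
means = np.array([a1.mean(), a2.mean()])
deltas = np.stack([a1 - means[0], a2 - means[1]])  # shape (2, N)

# Gradient of f = log(a1 / a2), evaluated at the mean values
grad = np.array([1.0 / means[0], -1.0 / means[1]])

# Central value and first-order fluctuations delta_f^i of the derived quantity
f_mean = np.log(means[0] / means[1])
f_deltas = grad @ deltas  # shape (N,)

# Naive standard error, valid here only because the toy data is uncorrelated
naive_error = f_deltas.std(ddof=1) / np.sqrt(f_deltas.size)
print(f_mean, naive_error)
```

The projected fluctuations `f_deltas` average to zero by construction, which is what allows them to be fed into any error estimator in place of the primary data.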

The Obs class is designed such that mathematical numpy functions can be used on Obs just as for regular floats.

import numpy as np

import pyerrors as pe

my_obs1 = pe.Obs([samples1], ['ensemble_name'])

my_obs2 = pe.Obs([samples2], ['ensemble_name'])

my_sum = my_obs1 + my_obs2

my_m_eff = np.log(my_obs1 / my_obs2)

iamzero = my_m_eff - my_m_eff

# Check that value and fluctuations are zero within machine precision

print(iamzero == 0.0)

> True

Error estimation

The error estimation within pyerrors is based on the gamma method introduced in arXiv:hep-lat/0306017.

After having arrived at the derived quantity of interest the gamma_method can be called as detailed in the following example.

my_sum.gamma_method()

print(my_sum)

> 1.70(57)

my_sum.details()

> Result 1.70000000e+00 +/- 5.72046658e-01 +/- 7.56746598e-02 (33.650%)

> t_int 2.71422900e+00 +/- 6.40320983e-01 S = 2.00

> 1000 samples in 1 ensemble:

> · Ensemble 'ensemble_name' : 1000 configurations (from 1 to 1000)

The gamma_method is not automatically called after every intermediate step in order to prevent computational overhead.

We use the following definition of the integrated autocorrelation time established in Madras & Sokal 1988

$$\tau_\mathrm{int}=\frac{1}{2}+\sum_{t=1}^{W}\rho(t)\geq \frac{1}{2}\,.$$

The window $W$ is determined via the automatic windowing procedure described in arXiv:hep-lat/0306017.

The standard value for the parameter $S$ of this automatic windowing procedure is $S=2$. Other values for $S$ can be passed to the gamma_method as parameter.

my_sum.gamma_method(S=3.0)

my_sum.details()

> Result 1.70000000e+00 +/- 6.30675201e-01 +/- 1.04585650e-01 (37.099%)

> t_int 3.29909703e+00 +/- 9.77310102e-01 S = 3.00

> 1000 samples in 1 ensemble:

> · Ensemble 'ensemble_name' : 1000 configurations (from 1 to 1000)

The integrated autocorrelation time $\tau_\mathrm{int}$ and the autocorrelation function $\rho(W)$ can be monitored via the methods pyerrors.obs.Obs.plot_tauint and pyerrors.obs.Obs.plot_rho.

If the parameter $S$ is set to zero it is assumed that the dataset does not exhibit any autocorrelation and the window size is chosen to be zero. In this case the error estimate is identical to the sample standard error.
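The definition of $\tau_\mathrm{int}$ and the zero-window limit can be sketched with plain numpy. This is an independent toy implementation with a fixed window, not the automatic windowing procedure of pyerrors; the helper names, the AR(1) test chain and the $1/N$ normalization of the variance are assumptions of this example. The error is obtained from the standard relation $\sigma^2 = 2\tau_\mathrm{int}\Gamma(0)/N$.

```python
import numpy as np

def rho(series, t_max):
    """Normalized autocorrelation function rho(t) for t = 0..t_max."""
    d = np.asarray(series, dtype=float)
    d = d - d.mean()
    n = d.size
    gamma = np.array([np.dot(d[: n - t], d[t:]) / (n - t) for t in range(t_max + 1)])
    return gamma / gamma[0]

def tau_int(series, window):
    """tau_int = 1/2 + sum_{t=1}^{W} rho(t) for a fixed window W."""
    return 0.5 + rho(series, window)[1:].sum()

def error_estimate(series, window):
    """sigma = sqrt(2 * tau_int * Gamma(0) / N) with Gamma(0) the 1/N variance."""
    series = np.asarray(series, dtype=float)
    return np.sqrt(2.0 * tau_int(series, window) * series.var() / series.size)

# Toy Markov chain: AR(1) process with known autocorrelation rho(t) = phi**t
rng = np.random.default_rng(1)
phi = 0.5
noise = rng.normal(size=20000)
x = np.empty_like(noise)
x[0] = noise[0]
for i in range(1, x.size):
    x[i] = phi * x[i - 1] + noise[i]

print(tau_int(x, 0))          # window zero: the sum is empty
print(tau_int(x, 20))         # should come out near 0.5 + phi / (1 - phi) = 1.5
print(error_estimate(x, 0))   # coincides with the naive standard error
print(error_estimate(x, 20))  # larger, because autocorrelation is included
```

With a window of zero the estimate reduces to the naive standard error of the mean, while a finite window inflates the error by the factor $\sqrt{2\tau_\mathrm{int}}$.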

Exponential tails

Slow modes in the Monte Carlo history can be accounted for by attaching an exponential tail to the autocorrelation function $\rho$ as suggested in arXiv:1009.5228. The longest autocorrelation time in the history, $\tau_\mathrm{exp}$, can be passed to the gamma_method as a parameter. In this case the automatic windowing procedure is bypassed and the parameter $S$ does not affect the error estimate.

my_sum.gamma_method(tau_exp=7.2)

my_sum.details()

> Result 1.70000000e+00 +/- 6.28097762e-01 +/- 5.79077524e-02 (36.947%)

> t_int 3.27218667e+00 +/- 7.99583654e-01 tau_exp = 7.20, N_sigma = 1

> 1000 samples in 1 ensemble:

> · Ensemble 'ensemble_name' : 1000 configurations (from 1 to 1000)

For the full API see pyerrors.obs.Obs.gamma_method.

Multiple ensembles/replica

Error propagation for multiple ensembles (Markov chains with different simulation parameters) is handled automatically. Ensembles are uniquely identified by their name.

obs1 = pe.Obs([samples1], ['ensemble1'])

obs2 = pe.Obs([samples2], ['ensemble2'])

my_sum = obs1 + obs2

my_sum.details()

> Result 2.00697958e+00

> 1500 samples in 2 ensembles:

> · Ensemble 'ensemble1' : 1000 configurations (from 1 to 1000)

> · Ensemble 'ensemble2' : 500 configurations (from 1 to 500)

Observables from the same Monte Carlo chain have to be initialized with the same name for correct error propagation. If different names were used in this case, the data would be treated as statistically independent, resulting in a loss of relevant information and a potential over- or underestimate of the statistical error.

pyerrors identifies multiple replica (independent Markov chains with identical simulation parameters) by the vertical bar | in the name of the data set.

obs1 = pe.Obs([samples1], ['ensemble1|r01'])

obs2 = pe.Obs([samples2], ['ensemble1|r02'])

my_sum = obs1 + obs2

my_sum.details()

> Result 2.00697958e+00

> 1500 samples in 1 ensemble:

> · Ensemble 'ensemble1'

> · Replicum 'r01' : 1000 configurations (from 1 to 1000)

> · Replicum 'r02' : 500 configurations (from 1 to 500)
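The naming convention can be illustrated without pyerrors. The helper below is hypothetical and only mimics the idea that the part of a name before the vertical bar identifies the ensemble and the part after it identifies the replicum; it is a sketch of the convention, not the library's internal code.

```python
from collections import defaultdict

def group_replica(names):
    """Group data set names by ensemble; the text after '|' labels the replicum."""
    groups = defaultdict(list)
    for name in names:
        ensemble, _, replicum = name.partition('|')
        # Names without a vertical bar have no separate replicum label
        groups[ensemble].append(replicum or None)
    return dict(groups)

print(group_replica(['ensemble1|r01', 'ensemble1|r02', 'ensemble2']))
```

In this picture `'ensemble1|r01'` and `'ensemble1|r02'` end up in the same ensemble, whereas `'ensemble2'` forms an ensemble of its own.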

Error estimation for multiple ensembles

In order to keep track of different error analysis parameters for different ensembles one can make use of global dictionaries as detailed in the following example.

pe.Obs.S_dict['ensemble1'] = 2.5

pe.Obs.tau_exp_dict['ensemble2'] = 8.0

pe.Obs.tau_exp_dict['ensemble3'] = 2.0

In case the gamma_method is called without any parameters it will use the values specified in the dictionaries for the respective ensembles.

Arguments passed explicitly to the gamma_method still take precedence over the dictionaries.

Irregular Monte Carlo chains

Obs objects defined on irregular Monte Carlo chains can be initialized with the parameter idl.

# Observable defined on configurations 20 to 519

obs1 = pe.Obs([samples1], ['ensemble1'], idl=[range(20, 520)])

obs1.details()

> Result 9.98319881e-01

> 500 samples in 1 ensemble:

> · Ensemble 'ensemble1' : 500 configurations (from 20 to 519)

# Observable defined on every second configuration between 5 and 1003

obs2 = pe.Obs([samples2], ['ensemble1'], idl=[range(5, 1005, 2)])

obs2.details()

> Result 9.99100712e-01

> 500 samples in 1 ensemble:

> · Ensemble 'ensemble1' : 500 configurations (from 5 to 1003 in steps of 2)

# Observable defined on configurations 2, 9, 28, 29 and 501

obs3 = pe.Obs([samples3], ['ensemble1'], idl=[[2, 9, 28, 29, 501]])

obs3.details()

> Result 1.01718064e+00

> 5 samples in 1 ensemble:

> · Ensemble 'ensemble1' : 5 configurations (irregular range)
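The configuration lists used in the three cases above are ordinary Python ranges and lists, so their content can be checked without pyerrors. The helper names `describe` and `is_regular` are hypothetical and exist only for this sketch.

```python
def describe(idl):
    """Return (number of configurations, first index, last index)."""
    return len(idl), idl[0], idl[-1]

def is_regular(idl):
    """True if consecutive configuration numbers are separated by a constant step."""
    steps = {b - a for a, b in zip(idl[:-1], idl[1:])}
    return len(steps) <= 1

idl_contiguous = range(20, 520)        # configurations 20 to 519
idl_strided = range(5, 1005, 2)        # every second configuration, 5 to 1003
idl_irregular = [2, 9, 28, 29, 501]    # explicit list of configurations

for idl in (idl_contiguous, idl_strided, idl_irregular):
    print(describe(idl), is_regular(idl))
```

Note that the upper bound of a `range` is exclusive, which is why `range(5, 1005, 2)` ends at configuration 1003.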

Obs objects defined on regular and irregular histories of the same ensemble can be combined with each other and the correct error propagation and estimation is automatically taken care of.

Warning: Irregular Monte Carlo chains can result in odd patterns in the autocorrelation functions.

Make sure to check the autocorrelation time with e.g. pyerrors.obs.Obs.plot_rho or pyerrors.obs.Obs.plot_tauint.

For the full API see pyerrors.obs.Obs.

Correlators

When one is not interested in single observables but in correlation functions, pyerrors offers the Corr class, which simplifies the corresponding error propagation and provides the user with a set of standard methods. In order to initialize a Corr object one needs to arrange the data as a list of Obs:

my_corr = pe.Corr([obs_0, obs_1, obs_2, obs_3])

print(my_corr)

> x0/a Corr(x0/a)

> ------------------

> 0 0.7957(80)

> 1 0.5156(51)

> 2 0.3227(33)

> 3 0.2041(21)

In case the correlation functions are not defined on the outermost timeslices, for example because of fixed boundary conditions, a padding can be introduced.

my_corr = pe.Corr([obs_0, obs_1, obs_2, obs_3], padding=[1, 1])

print(my_corr)

> x0/a Corr(x0/a)

> ------------------

> 0

> 1 0.7957(80)

> 2 0.5156(51)

> 3 0.3227(33)

> 4 0.2041(21)

> 5
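The effect of the padding on the time indices can be pictured with a plain Python list. This is only a sketch of the bookkeeping: the helper `pad` is hypothetical, and strings stand in for the Obs entries.

```python
def pad(entries, padding):
    """Prepend and append empty timeslices, marked by None."""
    front, back = padding
    return [None] * front + list(entries) + [None] * back

# Strings stand in for the four Obs entries of the correlator above
values = ["0.7957(80)", "0.5156(51)", "0.3227(33)", "0.2041(21)"]
padded = pad(values, [1, 1])

for x0, entry in enumerate(padded):
    print(x0, entry if entry is not None else "")
```

After padding, the entry that was at index 2 is found at index 3, and the outermost timeslices are empty.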

The individual entries of a correlator can be accessed via slicing

print(my_corr[3])

> 0.3227(33)

Error propagation with the Corr class works very similarly to Obs objects. Mathematical operations are overloaded and Corr objects can be combined with other Corr objects, Obs objects or real numbers and integers.

my_new_corr = 0.3 * my_corr[2] * my_corr * my_corr + 12 / my_corr

pyerrors provides the user with a set of regularly used methods for the manipulation of correlator objects:

- Corr.gamma_method applies the gamma method to all entries of the correlator.

- Corr.m_eff constructs effective masses. Various variants for periodic and fixed temporal boundary conditions are available.

- Corr.deriv returns the first derivative of the correlator as Corr. Different discretizations of the numerical derivative are available.

- Corr.second_deriv returns the second derivative of the correlator as Corr. Different discretizations of the numerical derivative are available.

- Corr.symmetric symmetrizes parity even correlation functions, assuming periodic boundary conditions.

- Corr.anti_symmetric anti-symmetrizes parity odd correlation functions, assuming periodic boundary conditions.

- Corr.T_symmetry averages a correlator with its time symmetry partner, assuming fixed boundary conditions.

- Corr.plateau extracts a plateau value from the correlator in a given range.

- Corr.roll periodically shifts the correlator.

- Corr.reverse reverses the time ordering of the correlator.

- Corr.correlate constructs a disconnected correlation function from the correlator and another Corr or Obs object.

- Corr.reweight reweights the correlator.
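To give a feeling for what an effective mass is, the sketch below evaluates the simple logarithmic definition $m_\mathrm{eff}(x_0)=\log\left(C(x_0)/C(x_0+1)\right)$ on the central values printed in the correlator example above. It uses plain numpy and ignores the errors entirely; treating this as the relevant variant is an assumption of the example, and Corr.m_eff offers several variants in addition to propagating the errors.

```python
import numpy as np

# Central values of the four correlator entries from the example above
corr_means = np.array([0.7957, 0.5156, 0.3227, 0.2041])

# Logarithmic effective mass: one entry fewer than the correlator itself
m_eff = np.log(corr_means[:-1] / corr_means[1:])

for x0, m in enumerate(m_eff):
    print(x0, round(float(m), 4))
```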

pyerrors can also handle matrices of correlation functions and extract energy states from these matrices via a generalized eigenvalue problem (see pyerrors.correlators.Corr.GEVP).

For the full API see pyerrors.correlators.Corr.

Complex valued observables

pyerrors can handle complex valued observables via the class pyerrors.obs.CObs.

CObs are initialized with a real and an imaginary part which both can be Obs valued.

my_real_part = pe.Obs([samples1], ['ensemble1'])

my_imag_part = pe.Obs([samples2], ['ensemble1'])

my_cobs = pe.CObs(my_real_part, my_imag_part)

my_cobs.gamma_method()

print(my_cobs)

> (0.9959(91)+0.659(28)j)

Elementary mathematical operations are overloaded and samples are properly propagated as for the Obs class.

my_derived_cobs = (my_cobs + my_cobs.conjugate()) / np.abs(my_cobs)

my_derived_cobs.gamma_method()

print(my_derived_cobs)

> (1.668(23)+0.0j)
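The central value of this derived quantity can be cross-checked with a built-in Python complex number, since $(z+z^*)/|z| = 2\,\mathrm{Re}(z)/|z|$ is purely real. The sketch uses only the rounded central values printed above and carries no error information, so agreement is expected only up to rounding and to effects of evaluating a non-linear function at the mean.

```python
# Rounded central values of the real and imaginary parts printed above
z = complex(0.9959, 0.659)

derived = (z + z.conjugate()) / abs(z)
print(derived)
```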

The Covobs class

In many projects, auxiliary data that is not based on Monte Carlo chains enters. Examples are experimentally determined meson masses which are used to set the scale, or renormalization constants. These numbers come with an error that has to be propagated through the analysis. The Covobs class allows one to define such quantities in pyerrors. Furthermore, external input might consist of correlated quantities. An example is the set of parameters of an interpolation formula, which are defined via mean values and a covariance matrix between all parameters. The contribution of the interpolation formula to the error of a derived quantity therefore might depend on the complete covariance matrix.

This concept is built into the definition of Covobs. In pyerrors, external input is defined by $M$ mean values, an $M\times M$ covariance matrix, where $M=1$ is permissible, and a name that uniquely identifies the covariance matrix. Below, we define the pion mass, based on its mean value and error, 134.9768(5). Note that the square of the error enters cov_Obs, since the second argument of this function is the covariance matrix of the Covobs.

import pyerrors.obs as pe

mpi = pe.cov_Obs(134.9768, 0.0005**2, 'pi^0 mass')

mpi.gamma_method()

mpi.details()

> Result 1.34976800e+02 +/- 5.00000000e-04 +/- 0.00000000e+00 (0.000%)

> pi^0 mass 5.00000000e-04

> 0 samples in 1 ensemble:

> · Covobs 'pi^0 mass'

The resulting object mpi is an Obs that contains a Covobs. In the following, it may be handled as any other Obs. The contribution of the covariance matrix to the error of an Obs is determined from the $M \times M$ covariance matrix $\Sigma$ and the gradient of the Obs with respect to the external quantities, which is the $1\times M$ Jacobian matrix $J$, via

$$s = \sqrt{J \Sigma J^T}\,,$$

where the Jacobian is computed for each derived quantity via automatic differentiation.

Correlated auxiliary data is defined similarly to above, e.g., via

RAP = pe.cov_Obs([16.7457, -19.0475], [[3.49591, -6.07560], [-6.07560, 10.5834]], 'R_AP, 1906.03445, (5.3a)')

print(RAP)

> [Obs[16.7(1.9)], Obs[-19.0(3.3)]]

where RAP now is a list of two Obs that contains the two correlated parameters.
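The quadratic form that combines the Jacobian with the covariance matrix can be evaluated by hand for the matrix above. The sketch uses plain numpy; the derived quantity, the sum of the two parameters, is a hypothetical choice made only to obtain a simple Jacobian, and pyerrors would compute the Jacobian via automatic differentiation instead.

```python
import numpy as np

# Covariance matrix of the two correlated parameters defined above
cov = np.array([[3.49591, -6.07560],
                [-6.07560, 10.5834]])

# Errors of the individual parameters: square roots of the diagonal entries
single_errors = np.sqrt(np.diag(cov))

# Hypothetical derived quantity f = p_0 + p_1, so that J = (1, 1)
jacobian = np.array([1.0, 1.0])
s = np.sqrt(jacobian @ cov @ jacobian)

print(single_errors)  # approximately [1.87, 3.25], matching 16.7(1.9) and -19.0(3.3)
print(s)              # approximately 1.39
```

Because of the strong anti-correlation, the error of the sum is smaller than either individual error, which is why the full covariance matrix and not just its diagonal has to be propagated.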

Since the gradient of a derived observable with respect to an external covariance matrix is propagated through the entire analysis, the Covobs class allows one to quote the derivative of a result with respect to the external quantities. If these derivatives are published together with the result, small shifts in the definition of external quantities, e.g., the definition of the physical point, can be performed a posteriori based on the published information. This may help to compare results of different groups. The gradient of an Obs o with respect to a covariance matrix with the identifying string k may be accessed via

o.covobs[k].grad

Error propagation in iterative algorithms

pyerrors supports exact linear error propagation for iterative algorithms like various variants of non-linear least squares fits or root finding. The derivatives required for the error propagation are calculated as described in arXiv:1809.01289.

Least squares fits

Standard non-linear least squares fits with errors on the dependent but not the independent variables can be performed with pyerrors.fits.least_squares. The default solver is the Levenberg-Marquardt algorithm implemented in scipy.

Fit functions have to be of the following form

import autograd.numpy as anp

def func(a, x):

    return a[1] * anp.exp(-a[0] * x)

It is important that numerical functions refer to autograd.numpy instead of numpy for the automatic differentiation in iterative algorithms to work properly.

Fits can then be performed via

fit_result = pe.fits.least_squares(x, y, func)

print("\n", fit_result)

> Fit with 2 parameters

> Method: Levenberg-Marquardt

> `ftol` termination condition is satisfied.

> chisquare/d.o.f.: 0.9593035785160936

> Goodness of fit:

> χ²/d.o.f. = 0.959304

> p-value = 0.5673

> Fit parameters:

> 0 0.0548(28)

> 1 1.933(64)

where x is a list or numpy.array of floats and y is a list or numpy.array of Obs.
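For orientation, the quantity minimized in an uncorrelated least squares fit of this kind is the usual $\chi^2=\sum_i \left((y_i - f(a, x_i))/\Delta y_i\right)^2$. The sketch below evaluates it with plain numpy on synthetic, noise-free data; the helper `chisquare`, the data and the parameter values are assumptions of this example, and no minimization or error propagation is performed.

```python
import numpy as np

def func(a, x):
    # Same functional form as the fit function above, written with plain numpy
    return a[1] * np.exp(-a[0] * x)

def chisquare(a, x, y_mean, y_err):
    """Sum of squared residuals, each weighted by the error of the data point."""
    residuals = (y_mean - func(a, x)) / y_err
    return float(np.sum(residuals ** 2))

# Synthetic, noise-free data generated from known parameters
x = np.arange(1, 11, dtype=float)
true_params = np.array([0.05, 2.0])
y_mean = func(true_params, x)
y_err = np.full_like(x, 0.05)

chi2_true = chisquare(true_params, x, y_mean, y_err)
chi2_off = chisquare(np.array([0.06, 2.0]), x, y_mean, y_err)
print(chi2_true, chi2_off)
```

The value vanishes at the parameters used to generate the data and grows as soon as a parameter is shifted away from them.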

Data stored in Corr objects can be fitted directly using the Corr.fit method.

my_corr = pe.Corr(y)

fit_result = my_corr.fit(func, fitrange=[12, 25])

This can simplify working with absolute fit ranges and takes care of gaps in the data automatically.

For fit functions with multiple independent variables the fit function can be of the form

def func(a, x):

    (x1, x2) = x

    return a[0] * x1 ** 2 + a[1] * x2

pyerrors also supports correlated fits which can be triggered via the parameter correlated_fit=True.

Details about how the required covariance matrix is estimated can be found in pyerrors.obs.covariance.

Direct visualizations of the performed fits can be triggered via resplot=True or qqplot=True.

For all available options including combined fits to multiple datasets see pyerrors.fits.least_squares.

Total least squares fits

pyerrors can also fit data with errors on both the dependent and independent variables using the total least squares method, also referred to as orthogonal distance regression, as implemented in scipy, see pyerrors.fits.total_least_squares. The syntax is identical to the standard least squares case, the only difference being that x also has to be a list or numpy.array of Obs.

For the full API see pyerrors.fits for fits and pyerrors.roots for finding roots of functions.

Matrix operations

pyerrors provides wrappers for Obs- and CObs-valued matrix operations based on numpy.linalg. The supported functions include:

- inv for the matrix inverse.

- cholesky for the Cholesky decomposition.

- det for the matrix determinant.

- eigh for eigenvalues and eigenvectors of Hermitian matrices.

- eig for eigenvalues of general matrices.

- pinv for the Moore-Penrose pseudoinverse.

- svd for the singular value decomposition.

For the full API see pyerrors.linalg.

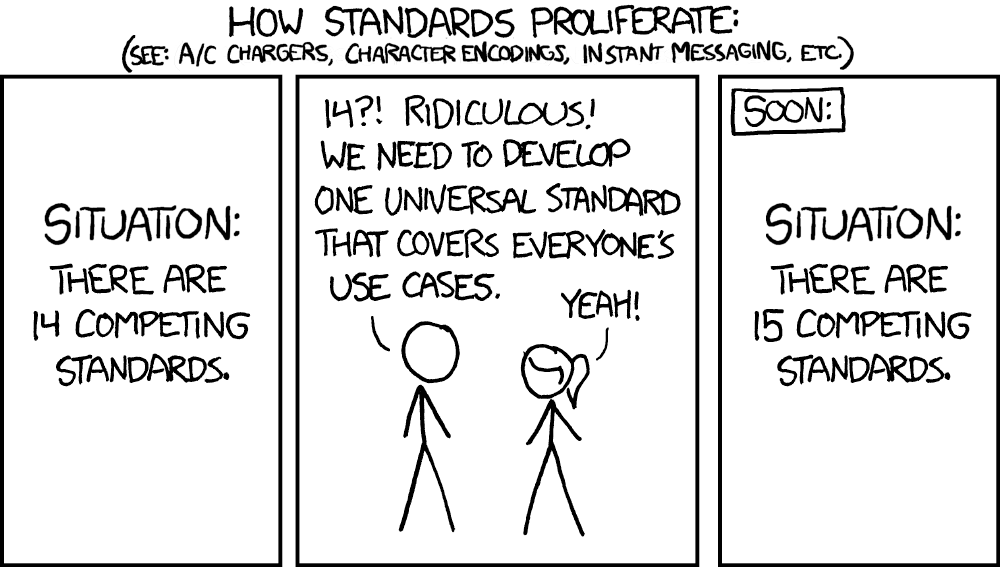

Export data

The preferred exported file format within pyerrors is json.gz. Files written to this format are valid JSON files that have been compressed using gzip. The structure of the content is inspired by the dobs format of the ALPHA collaboration. The aim of the format is to facilitate the storage of data in a self-contained way such that, even years after the creation of the file, it is possible to extract all necessary information:

- What observables are stored? Possibly: How exactly are they defined?

- How does each single ensemble or external quantity contribute to the error of the observable?

- Who wrote the file, when, and on which machine?

This can be achieved by storing all information in one single file. The export routines of pyerrors are written such that as much information as possible is written automatically, as described in the following example.

my_obs = pe.Obs([samples], ["test_ensemble"])

my_obs.tag = "My observable"

pe.input.json.dump_to_json(my_obs, "test_output_file", description="This file contains a test observable")

# For a single observable one can equivalently use the class method dump

my_obs.dump("test_output_file", description="This file contains a test observable")

check = pe.input.json.load_json("test_output_file")

print(my_obs == check)

> True

The format also allows one to directly write out the content of Corr objects or lists and arrays of Obs objects by passing the desired data to pyerrors.input.json.dump_to_json.

json.gz format specification

The first entries of the file provide optional auxiliary information:

- program is a string that indicates which program was used to write the file.

- version is a string that specifies the version of the format.

- who is a string that specifies the user name of the creator of the file.

- date is a string and contains the creation date of the file.

- host is a string and contains the hostname of the machine where the file has been written.

- description contains information on the content of the file. This field is not filled automatically in pyerrors. The user is advised to provide as detailed information as possible in this field. Examples are: Input files of measurements or simulations, LaTeX formulae or references to publications to specify how the observables have been computed, details on the analysis strategy, ... This field may be any valid JSON type. Strings, arrays or objects (equivalent to dicts in python) are well suited to provide information.

The only necessary entry of the file is the field

- obsdata, an array that contains the actual data.

Each entry of the array belongs to a single structure of observables. Currently, these structures can be any of Obs, list, numpy.ndarray, Corr. All Obs inside a structure (with dimension > 0) have to be defined on the same set of configurations. Different structures, which are represented by entries of the array obsdata, are treated independently. Each entry of the array obsdata has the following required entries:

- type is a string that specifies the type of the structure. This allows one to parse the content to the correct form after reading the file. It is always possible to interpret the content as a list of Obs.

- value is an array that contains the mean values of the Obs inside the structure.

The following entries are optional:

- layout is a string that specifies the layout of multi-dimensional structures. Examples are "2, 2" for a 2x2 dimensional matrix or "64, 4, 4" for a Corr with $T=64$ and 4x4 matrices on each time slice. "1" denotes a single Obs. Multi-dimensional structures are stored in row-major format (see below).

- tag is any JSON type. It contains additional information concerning the structure. The tag of an Obs in pyerrors is written here.

- reweighted is a Bool that may be used to specify whether the Obs in the structure have been reweighted.

- data is an array that contains the data from MC chains. We will define it below.

- cdata is an array that contains the data from external quantities with an error (Covobs in pyerrors). We will define it below.

The array data contains the data from MC chains. Each entry of the array corresponds to one ensemble and contains:

- id, a string that contains the name of the ensemble

- replica, an array that contains an entry per replica of the ensemble.

Each entry of replica contains

- name, a string that contains the name of the replica

- deltas, an array that contains the actual data.

Each entry in deltas corresponds to one configuration of the replica and has $1+N$ many entries. The first entry is an integer that specifies the configuration number that, together with ensemble and replica name, may be used to uniquely identify the configuration on which the data has been obtained. The following N entries specify the deltas, i.e., the deviation of the observable from the mean value on this configuration, of each Obs inside the structure. Multi-dimensional structures are stored in a row-major format. For primary observables, such as correlation functions, $value + delta_i$ matches the primary data obtained on the configuration.
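The row-major convention for one entry of deltas can be sketched with numpy for a structure with layout "2, 2". The numbers and the configuration number are made up for the illustration; only the ordering of the entries is the point.

```python
import numpy as np

# Made-up fluctuations of a 2x2 matrix of observables on one configuration
matrix_deltas = np.array([[0.1, -0.2],
                          [0.3, 0.4]])
config_number = 17

# One entry of deltas: configuration number followed by N = 4 row-major values
entry = [config_number] + matrix_deltas.ravel(order="C").tolist()
print(entry)

# Reading back: drop the configuration number and restore the 2x2 layout
restored = np.array(entry[1:]).reshape(2, 2)
print(restored)
```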

The array cdata contains information about the contribution of auxiliary observables, represented by Covobs in pyerrors, to the total error of the observables. Each entry of the array belongs to one auxiliary covariance matrix and contains:

- id, a string that identifies the covariance matrix

- layout, a string that defines the dimensions of the $M\times M$ covariance matrix (has to be "M, M" or "1").

- cov, an array that contains the $M\times M$ many entries of the covariance matrix, stored in row-major format.

- grad, an array that contains N entries, one for each Obs inside the structure. Each entry itself is an array that contains the M gradients of the Nth observable with respect to the quantity that corresponds to the Mth diagonal entry of the covariance matrix.
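To make the nesting of the fields more tangible, the following sketch writes a small hand-built document as gzip-compressed JSON with the Python standard library and reads it back. The content is an assumption assembled from the field names described above: it has not been produced by pyerrors and has not been validated against the JSON schema, and the file name and all numbers are made up.

```python
import gzip
import json
import os
import tempfile

# Hand-built document with one structure holding a single observable
document = {
    "description": "Hand-written sketch of the layout, not produced by pyerrors",
    "obsdata": [{
        "type": "Obs",
        "layout": "1",
        "value": [1.5],
        "data": [{
            "id": "test_ensemble",
            "replica": [{
                "name": "test_ensemble",
                # Each entry: configuration number followed by one delta
                "deltas": [[1, 0.25], [2, -0.5], [3, 0.25]],
            }],
        }],
    }],
}

# Write the document as gzip-compressed JSON and read it back
path = os.path.join(tempfile.mkdtemp(), "sketch.json.gz")
with gzip.open(path, "wt", encoding="utf-8") as f:
    json.dump(document, f)
with gzip.open(path, "rt", encoding="utf-8") as f:
    loaded = json.load(f)

# For a primary observable, value + delta recovers the data per configuration
structure = loaded["obsdata"][0]
deltas = structure["data"][0]["replica"][0]["deltas"]
primary = {cfg: structure["value"][0] + delta for cfg, delta in deltas}
print(primary)
```

Files intended for use with pyerrors should be written with its own export routines, which fill in the auxiliary fields automatically.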

A JSON schema that may be used to verify the correctness of a file with respect to the format definition is stored in ./examples/json_schema.json. The schema is a self-descriptive format definition and contains an exemplary file.

Julia I/O routines for the json.gz format, compatible with ADerrors.jl, can be found here.

1r''' 2# What is pyerrors? 3`pyerrors` is a python package for error computation and propagation of Markov chain Monte Carlo data. 4It is based on the gamma method [arXiv:hep-lat/0306017](https://arxiv.org/abs/hep-lat/0306017). Some of its features are: 5- automatic differentiation for exact linear error propagation as suggested in [arXiv:1809.01289](https://arxiv.org/abs/1809.01289) (partly based on the [autograd](https://github.com/HIPS/autograd) package). 6- treatment of slow modes in the simulation as suggested in [arXiv:1009.5228](https://arxiv.org/abs/1009.5228). 7- coherent error propagation for data from different Markov chains. 8- non-linear fits with x- and y-errors and exact linear error propagation based on automatic differentiation as introduced in [arXiv:1809.01289](https://arxiv.org/abs/1809.01289). 9- real and complex matrix operations and their error propagation based on automatic differentiation (Matrix inverse, Cholesky decomposition, calculation of eigenvalues and eigenvectors, singular value decomposition...). 10 11More detailed examples can found in the [GitHub repository](https://github.com/fjosw/pyerrors/tree/develop/examples) [](https://mybinder.org/v2/gh/fjosw/pyerrors/HEAD?labpath=examples). 12 13If you use `pyerrors` for research that leads to a publication please consider citing: 14- Fabian Joswig, Simon Kuberski, Justus T. Kuhlmann, Jan Neuendorf, *pyerrors: a python framework for error analysis of Monte Carlo data*. Comput.Phys.Commun. 288 (2023) 108750. 15- Ulli Wolff, *Monte Carlo errors with less errors*. Comput.Phys.Commun. 156 (2004) 143-153, Comput.Phys.Commun. 176 (2007) 383 (erratum). 16- Alberto Ramos, *Automatic differentiation for error analysis of Monte Carlo data*. Comput.Phys.Commun. 238 (2019) 19-35. 17 18and 19 20- Stefan Schaefer, Rainer Sommer, Francesco Virotta, *Critical slowing down and error analysis in lattice QCD simulations*. Nucl.Phys.B 845 (2011) 93-119. 21 22where applicable. 
23 24There exist similar publicly available implementations of gamma method error analysis suites in [Fortran](https://gitlab.ift.uam-csic.es/alberto/aderrors), [Julia](https://gitlab.ift.uam-csic.es/alberto/aderrors.jl) and [Python](https://github.com/mbruno46/pyobs). 25 26## Installation 27 28Install the most recent release using pip and [pypi](https://pypi.org/project/pyerrors/): 29```bash 30python -m pip install pyerrors # Fresh install 31python -m pip install -U pyerrors # Update 32``` 33Install the most recent release using conda and [conda-forge](https://anaconda.org/conda-forge/pyerrors): 34```bash 35conda install -c conda-forge pyerrors # Fresh install 36conda update -c conda-forge pyerrors # Update 37``` 38Install the current `develop` version: 39```bash 40python -m pip install git+https://github.com/fjosw/pyerrors.git@develop 41``` 42 43## Basic example 44 45```python 46import numpy as np 47import pyerrors as pe 48 49my_obs = pe.Obs([samples], ['ensemble_name']) # Initialize an Obs object 50my_new_obs = 2 * np.log(my_obs) / my_obs ** 2 # Construct derived Obs object 51my_new_obs.gamma_method() # Estimate the statistical error 52print(my_new_obs) # Print the result to stdout 53> 0.31498(72) 54``` 55 56# The `Obs` class 57 58`pyerrors` introduces a new datatype, `Obs`, which simplifies error propagation and estimation for auto- and cross-correlated data. 59An `Obs` object can be initialized with two arguments, the first is a list containing the samples for an observable from a Monte Carlo chain. 60The samples can either be provided as python list or as numpy array. 61The second argument is a list containing the names of the respective Monte Carlo chains as strings. These strings uniquely identify a Monte Carlo chain/ensemble. **It is crucial for the correct error propagation that observations from the same Monte Carlo history are labeled with the same name. 
See [Multiple ensembles/replica](#multiple-ensemblesreplica) for details.** 62 63```python 64import pyerrors as pe 65 66my_obs = pe.Obs([samples], ['ensemble_name']) 67``` 68 69## Error propagation 70 71When performing mathematical operations on `Obs` objects the correct error propagation is intrinsically taken care of using a first order Taylor expansion 72$$\delta_f^i=\sum_\alpha \bar{f}_\alpha \delta_\alpha^i\,,\quad \delta_\alpha^i=a_\alpha^i-\bar{a}_\alpha\,,$$ 73as introduced in [arXiv:hep-lat/0306017](https://arxiv.org/abs/hep-lat/0306017). 74The required derivatives $\bar{f}_\alpha$ are evaluated up to machine precision via automatic differentiation as suggested in [arXiv:1809.01289](https://arxiv.org/abs/1809.01289). 75 76The `Obs` class is designed such that mathematical numpy functions can be used on `Obs` just as for regular floats. 77 78```python 79import numpy as np 80import pyerrors as pe 81 82my_obs1 = pe.Obs([samples1], ['ensemble_name']) 83my_obs2 = pe.Obs([samples2], ['ensemble_name']) 84 85my_sum = my_obs1 + my_obs2 86 87my_m_eff = np.log(my_obs1 / my_obs2) 88 89iamzero = my_m_eff - my_m_eff 90# Check that value and fluctuations are zero within machine precision 91print(iamzero == 0.0) 92> True 93``` 94 95## Error estimation 96 97The error estimation within `pyerrors` is based on the gamma method introduced in [arXiv:hep-lat/0306017](https://arxiv.org/abs/hep-lat/0306017). 98After having arrived at the derived quantity of interest the `gamma_method` can be called as detailed in the following example. 
99 100```python 101my_sum.gamma_method() 102print(my_sum) 103> 1.70(57) 104my_sum.details() 105> Result 1.70000000e+00 +/- 5.72046658e-01 +/- 7.56746598e-02 (33.650%) 106> t_int 2.71422900e+00 +/- 6.40320983e-01 S = 2.00 107> 1000 samples in 1 ensemble: 108> · Ensemble 'ensemble_name' : 1000 configurations (from 1 to 1000) 109 110``` 111The `gamma_method` is not automatically called after every intermediate step in order to prevent computational overhead. 112 113We use the following definition of the integrated autocorrelation time established in [Madras & Sokal 1988](https://link.springer.com/article/10.1007/BF01022990) 114$$\tau_\mathrm{int}=\frac{1}{2}+\sum_{t=1}^{W}\rho(t)\geq \frac{1}{2}\,.$$ 115The window $W$ is determined via the automatic windowing procedure described in [arXiv:hep-lat/0306017](https://arxiv.org/abs/hep-lat/0306017). 116The standard value for the parameter $S$ of this automatic windowing procedure is $S=2$. Other values for $S$ can be passed to the `gamma_method` as parameter. 117 118```python 119my_sum.gamma_method(S=3.0) 120my_sum.details() 121> Result 1.70000000e+00 +/- 6.30675201e-01 +/- 1.04585650e-01 (37.099%) 122> t_int 3.29909703e+00 +/- 9.77310102e-01 S = 3.00 123> 1000 samples in 1 ensemble: 124> · Ensemble 'ensemble_name' : 1000 configurations (from 1 to 1000) 125 126``` 127 128The integrated autocorrelation time $\tau_\mathrm{int}$ and the autocorrelation function $\rho(W)$ can be monitored via the methods `pyerrors.obs.Obs.plot_tauint` and `pyerrors.obs.Obs.plot_rho`. 129 130If the parameter $S$ is set to zero it is assumed that the dataset does not exhibit any autocorrelation and the window size is chosen to be zero. 131In this case the error estimate is identical to the sample standard error. 132 133### Exponential tails 134 135Slow modes in the Monte Carlo history can be accounted for by attaching an exponential tail to the autocorrelation function $\rho$ as suggested in [arXiv:1009.5228](https://arxiv.org/abs/1009.5228). 
The longest autocorrelation time in the history, $\tau_\mathrm{exp}$, can be passed to the `gamma_method` as a parameter. In this case the automatic windowing procedure is not applied and the parameter $S$ does not affect the error estimate.

```python
my_sum.gamma_method(tau_exp=7.2)
my_sum.details()
> Result 1.70000000e+00 +/- 6.28097762e-01 +/- 5.79077524e-02 (36.947%)
> t_int 3.27218667e+00 +/- 7.99583654e-01 tau_exp = 7.20, N_sigma = 1
> 1000 samples in 1 ensemble:
> · Ensemble 'ensemble_name' : 1000 configurations (from 1 to 1000)
```

For the full API see `pyerrors.obs.Obs.gamma_method`.

## Multiple ensembles/replica

Error propagation for multiple ensembles (Markov chains with different simulation parameters) is handled automatically. Ensembles are uniquely identified by their `name`.

```python
obs1 = pe.Obs([samples1], ['ensemble1'])
obs2 = pe.Obs([samples2], ['ensemble2'])

my_sum = obs1 + obs2
my_sum.details()
> Result 2.00697958e+00
> 1500 samples in 2 ensembles:
> · Ensemble 'ensemble1' : 1000 configurations (from 1 to 1000)
> · Ensemble 'ensemble2' : 500 configurations (from 1 to 500)
```
Observables from the **same Monte Carlo chain** have to be initialized with the **same name** for correct error propagation. If different names were used in this case, the data would be treated as statistically independent, resulting in a loss of relevant information and a potential over- or underestimate of the statistical error.


`pyerrors` identifies multiple replica (independent Markov chains with identical simulation parameters) by the vertical bar `|` in the name of the data set.

```python
obs1 = pe.Obs([samples1], ['ensemble1|r01'])
obs2 = pe.Obs([samples2], ['ensemble1|r02'])

my_sum = obs1 + obs2
my_sum.details()
> Result 2.00697958e+00
> 1500 samples in 1 ensemble:
> · Ensemble 'ensemble1'
>     · Replicum 'r01' : 1000 configurations (from 1 to 1000)
>     · Replicum 'r02' : 500 configurations (from 1 to 500)
```

### Error estimation for multiple ensembles

In order to keep track of different error analysis parameters for different ensembles one can make use of global dictionaries as detailed in the following example.

```python
pe.Obs.S_dict['ensemble1'] = 2.5
pe.Obs.tau_exp_dict['ensemble2'] = 8.0
pe.Obs.tau_exp_dict['ensemble3'] = 2.0
```

In case the `gamma_method` is called without any parameters it will use the values specified in the dictionaries for the respective ensembles.
Arguments passed explicitly to the `gamma_method` still take precedence over the dictionaries.


## Irregular Monte Carlo chains

`Obs` objects defined on irregular Monte Carlo chains can be initialized with the parameter `idl`.

```python
# Observable defined on configurations 20 to 519
obs1 = pe.Obs([samples1], ['ensemble1'], idl=[range(20, 520)])
obs1.details()
> Result 9.98319881e-01
> 500 samples in 1 ensemble:
> · Ensemble 'ensemble1' : 500 configurations (from 20 to 519)

# Observable defined on every second configuration between 5 and 1003
obs2 = pe.Obs([samples2], ['ensemble1'], idl=[range(5, 1005, 2)])
obs2.details()
> Result 9.99100712e-01
> 500 samples in 1 ensemble:
> · Ensemble 'ensemble1' : 500 configurations (from 5 to 1003 in steps of 2)

# Observable defined on configurations 2, 9, 28, 29 and 501
obs3 = pe.Obs([samples3], ['ensemble1'], idl=[[2, 9, 28, 29, 501]])
obs3.details()
> Result 1.01718064e+00
> 5 samples in 1 ensemble:
> · Ensemble 'ensemble1' : 5 configurations (irregular range)

```

`Obs` objects defined on regular and irregular histories of the same ensemble can be combined with each other and the correct error propagation and estimation is automatically taken care of.

**Warning:** Irregular Monte Carlo chains can result in odd patterns in the autocorrelation functions.
Make sure to check the autocorrelation time with e.g. `pyerrors.obs.Obs.plot_rho` or `pyerrors.obs.Obs.plot_tauint`.

For the full API see `pyerrors.obs.Obs`.

# Correlators
When one is not interested in single observables but correlation functions, `pyerrors` offers the `Corr` class which simplifies the corresponding error propagation and provides the user with a set of standard methods.
In order to initialize a `Corr` object one needs to arrange the data as a list of `Obs`
```python
my_corr = pe.Corr([obs_0, obs_1, obs_2, obs_3])
print(my_corr)
> x0/a Corr(x0/a)
> ------------------
> 0 0.7957(80)
> 1 0.5156(51)
> 2 0.3227(33)
> 3 0.2041(21)
```
In case the correlation functions are not defined on the outermost timeslices, for example because of fixed boundary conditions, a padding can be introduced.
```python
my_corr = pe.Corr([obs_0, obs_1, obs_2, obs_3], padding=[1, 1])
print(my_corr)
> x0/a Corr(x0/a)
> ------------------
> 0
> 1 0.7957(80)
> 2 0.5156(51)
> 3 0.3227(33)
> 4 0.2041(21)
> 5
```
The individual entries of a correlator can be accessed via indexing
```python
print(my_corr[3])
> 0.3227(33)
```
Error propagation with the `Corr` class works very similarly to `Obs` objects. Mathematical operations are overloaded and `Corr` objects can be combined with other `Corr` objects, `Obs` objects or real numbers and integers.
```python
my_new_corr = 0.3 * my_corr[2] * my_corr * my_corr + 12 / my_corr
```

`pyerrors` provides the user with a set of regularly used methods for the manipulation of correlator objects:
- `Corr.gamma_method` applies the gamma method to all entries of the correlator.
- `Corr.m_eff` to construct effective masses. Various variants for periodic and fixed temporal boundary conditions are available.
- `Corr.deriv` returns the first derivative of the correlator as `Corr`. Different discretizations of the numerical derivative are available.
- `Corr.second_deriv` returns the second derivative of the correlator as `Corr`. Different discretizations of the numerical derivative are available.
- `Corr.symmetric` symmetrizes parity even correlation functions, assuming periodic boundary conditions.
- `Corr.anti_symmetric` anti-symmetrizes parity odd correlation functions, assuming periodic boundary conditions.
- `Corr.T_symmetry` averages a correlator with its time symmetry partner, assuming fixed boundary conditions.
- `Corr.plateau` extracts a plateau value from the correlator in a given range.
- `Corr.roll` periodically shifts the correlator.
- `Corr.reverse` reverses the time ordering of the correlator.
- `Corr.correlate` constructs a disconnected correlation function from the correlator and another `Corr` or `Obs` object.
- `Corr.reweight` reweights the correlator.

`pyerrors` can also handle matrices of correlation functions and extract energy states from these matrices via a generalized eigenvalue problem (see `pyerrors.correlators.Corr.GEVP`).

For the full API see `pyerrors.correlators.Corr`.

# Complex valued observables

`pyerrors` can handle complex valued observables via the class `pyerrors.obs.CObs`.
`CObs` are initialized with a real and an imaginary part which both can be `Obs` valued.

```python
my_real_part = pe.Obs([samples1], ['ensemble1'])
my_imag_part = pe.Obs([samples2], ['ensemble1'])

my_cobs = pe.CObs(my_real_part, my_imag_part)
my_cobs.gamma_method()
print(my_cobs)
> (0.9959(91)+0.659(28)j)
```

Elementary mathematical operations are overloaded and samples are properly propagated as for the `Obs` class.
```python
my_derived_cobs = (my_cobs + my_cobs.conjugate()) / np.abs(my_cobs)
my_derived_cobs.gamma_method()
print(my_derived_cobs)
> (1.668(23)+0.0j)
```

# The `Covobs` class
In many projects, auxiliary data that is not based on Monte Carlo chains enters. Examples are experimentally determined meson masses which are used to set the scale or renormalization constants. These numbers come with an error that has to be propagated through the analysis. The `Covobs` class allows one to define such quantities in `pyerrors`.
Furthermore, external input might consist of correlated quantities. An example is the set of parameters of an interpolation formula, which are defined via mean values and a covariance matrix between all parameters. The contribution of the interpolation formula to the error of a derived quantity therefore might depend on the complete covariance matrix.

This concept is built into the definition of `Covobs`. In `pyerrors`, external input is defined by $M$ mean values, an $M\times M$ covariance matrix, where $M=1$ is permissible, and a name that uniquely identifies the covariance matrix. Below, we define the pion mass, based on its mean value and error, 134.9768(5). **Note that the square of the error enters `cov_Obs`**, since the second argument of this function is the covariance matrix of the `Covobs`.

```python
import pyerrors.obs as pe

mpi = pe.cov_Obs(134.9768, 0.0005**2, 'pi^0 mass')
mpi.gamma_method()
mpi.details()
> Result 1.34976800e+02 +/- 5.00000000e-04 +/- 0.00000000e+00 (0.000%)
> pi^0 mass 5.00000000e-04
> 0 samples in 1 ensemble:
> · Covobs 'pi^0 mass'
```
The resulting object `mpi` is an `Obs` that contains a `Covobs`. In the following, it may be handled as any other `Obs`. The contribution of the covariance matrix to the error of an `Obs` is determined from the $M \times M$ covariance matrix $\Sigma$ and the gradient of the `Obs` with respect to the external quantities, which is the $1\times M$ Jacobian matrix $J$, via
$$s = \sqrt{J \Sigma J^T}\,,$$
where the Jacobian is computed for each derived quantity via automatic differentiation.

Correlated auxiliary data is defined similarly to above, e.g., via
```python
RAP = pe.cov_Obs([16.7457, -19.0475], [[3.49591, -6.07560], [-6.07560, 10.5834]], 'R_AP, 1906.03445, (5.3a)')
print(RAP)
> [Obs[16.7(1.9)], Obs[-19.0(3.3)]]
```
where `RAP` now is a list of two `Obs` that contains the two correlated parameters.

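
As an illustration of how a covariance matrix enters the error of a derived quantity, the following sketch evaluates the quadratic form of gradient and covariance matrix by hand for the sum of the two `RAP` parameters. It uses only the Python standard library; the helper name `covobs_error` and the choice of the derived quantity are assumptions made for this example and are not part of the `pyerrors` API.

```python
import math


def covobs_error(grad, cov):
    """Return sqrt(grad . cov . grad) for a gradient and a covariance matrix.

    Hypothetical helper for illustration only, not a pyerrors function.
    """
    m = len(grad)
    variance = sum(grad[i] * cov[i][j] * grad[j] for i in range(m) for j in range(m))
    return math.sqrt(variance)


# Covariance matrix of the two correlated parameters from the example above
cov = [[3.49591, -6.07560], [-6.07560, 10.5834]]

# Gradients of the single parameters pick out the diagonal entries
err_first = covobs_error([1.0, 0.0], cov)
err_second = covobs_error([0.0, 1.0], cov)

# The derived quantity f = p0 + p1 has the gradient (1, 1)
err_sum = covobs_error([1.0, 1.0], cov)

# Neglecting the off-diagonal entries would give a much larger error
err_sum_uncorrelated = math.sqrt(cov[0][0] + cov[1][1])
```

Because the two parameters are strongly anti-correlated, the error of the sum is smaller than either individual error, which is why the complete covariance matrix has to be propagated.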

Since the gradient of a derived observable with respect to an external covariance matrix is propagated through the entire analysis, the `Covobs` class makes it possible to quote the derivative of a result with respect to the external quantities. If these derivatives are published together with the result, small shifts in the definition of external quantities, e.g., the definition of the physical point, can be performed a posteriori based on the published information. This may help to compare results of different groups. The gradient of an `Obs` `o` with respect to a covariance matrix with the identifying string `k` may be accessed via
```python
o.covobs[k].grad
```

# Error propagation in iterative algorithms

`pyerrors` supports exact linear error propagation for iterative algorithms like various variants of non-linear least squares fits or root finding. The derivatives required for the error propagation are calculated as described in [arXiv:1809.01289](https://arxiv.org/abs/1809.01289).

## Least squares fits

Standard non-linear least squares fits with errors on the dependent but not the independent variables can be performed with `pyerrors.fits.least_squares`. The default solver is the Levenberg-Marquardt algorithm implemented in [scipy](https://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.least_squares.html).

Fit functions have to be of the following form
```python
import autograd.numpy as anp

def func(a, x):
    return a[1] * anp.exp(-a[0] * x)
```
**It is important that numerical functions refer to `autograd.numpy` instead of `numpy` for the automatic differentiation in iterative algorithms to work properly.**

Fits can then be performed via
```python
fit_result = pe.fits.least_squares(x, y, func)
print("\n", fit_result)
> Fit with 2 parameters
> Method: Levenberg-Marquardt
> `ftol` termination condition is satisfied.
> chisquare/d.o.f.: 0.9593035785160936

> Goodness of fit:
> χ²/d.o.f. = 0.959304
> p-value = 0.5673
> Fit parameters:
> 0 0.0548(28)
> 1 1.933(64)
```
where x is a `list` or `numpy.array` of `floats` and y is a `list` or `numpy.array` of `Obs`.

Data stored in `Corr` objects can be fitted directly using the `Corr.fit` method.
```python
my_corr = pe.Corr(y)
fit_result = my_corr.fit(func, fitrange=[12, 25])
```
This can simplify working with absolute fit ranges and takes care of gaps in the data automatically.

For fit functions with multiple independent variables the fit function can be of the form

```python
def func(a, x):
    (x1, x2) = x
    return a[0] * x1 ** 2 + a[1] * x2
```

`pyerrors` also supports correlated fits which can be triggered via the parameter `correlated_fit=True`.
Details about how the required covariance matrix is estimated can be found in `pyerrors.obs.covariance`.
Direct visualizations of the performed fits can be triggered via `resplot=True` or `qqplot=True`.

For all available options including combined fits to multiple datasets see `pyerrors.fits.least_squares`.

## Total least squares fits
`pyerrors` can also fit data with errors on both the dependent and independent variables using the total least squares method, also referred to as orthogonal distance regression, as implemented in [scipy](https://docs.scipy.org/doc/scipy/reference/odr.html), see `pyerrors.fits.total_least_squares`. The syntax is identical to the standard least squares case, the only difference being that `x` also has to be a `list` or `numpy.array` of `Obs`.

For the full API see `pyerrors.fits` for fits and `pyerrors.roots` for finding roots of functions.

# Matrix operations
`pyerrors` provides wrappers for `Obs`- and `CObs`-valued matrix operations based on `numpy.linalg`. The supported functions include:
- `inv` for the matrix inverse.
- `cholesky` for the Cholesky decomposition.
- `det` for the matrix determinant.
- `eigh` for eigenvalues and eigenvectors of Hermitian matrices.
- `eig` for eigenvalues of general matrices.
- `pinv` for the Moore-Penrose pseudoinverse.
- `svd` for the singular value decomposition.

For the full API see `pyerrors.linalg`.

# Export data

[<img src="https://imgs.xkcd.com/comics/standards_2x.png" width="75%" height="75%">](https://xkcd.com/927/)

The preferred exported file format within `pyerrors` is json.gz. Files written to this format are valid JSON files that have been compressed using gzip. The structure of the content is inspired by the dobs format of the ALPHA collaboration. The aim of the format is to facilitate the storage of data in a self-contained way such that, even years after the creation of the file, it is possible to extract all necessary information:
- What observables are stored? Possibly: How exactly are they defined?
- How does each single ensemble or external quantity contribute to the error of the observable?
- Who wrote the file, when, and on which machine?

This can be achieved by storing all information in one single file.
The export routines of `pyerrors` are written such that as much information as possible is written automatically as described in the following example
```python
my_obs = pe.Obs([samples], ["test_ensemble"])
my_obs.tag = "My observable"

pe.input.json.dump_to_json(my_obs, "test_output_file", description="This file contains a test observable")
# For a single observable one can equivalently use the class method dump
my_obs.dump("test_output_file", description="This file contains a test observable")

check = pe.input.json.load_json("test_output_file")

print(my_obs == check)
> True
```
The format also allows one to directly write out the content of `Corr` objects or lists and arrays of `Obs` objects by passing the desired data to `pyerrors.input.json.dump_to_json`.

## json.gz format specification
The first entries of the file provide optional auxiliary information:
- `program` is a string that indicates which program was used to write the file.
- `version` is a string that specifies the version of the format.
- `who` is a string that specifies the user name of the creator of the file.
- `date` is a string and contains the creation date of the file.
- `host` is a string and contains the hostname of the machine where the file has been written.
- `description` contains information on the content of the file. This field is not filled automatically in `pyerrors`. The user is advised to provide as detailed information as possible in this field. Examples are: Input files of measurements or simulations, LaTeX formulae or references to publications to specify how the observables have been computed, details on the analysis strategy, ... This field may be any valid JSON type. Strings, arrays or objects (equivalent to dicts in python) are well suited to provide information.

The only necessary entry of the file is the field
- `obsdata`, an array that contains the actual data.

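
Since a json.gz file is plain JSON compressed with gzip, the top-level entries listed above can be inspected with the Python standard library alone, without `pyerrors`. The sketch below writes a small hand-built file and reads its header back; the helper `read_header`, the file name and all field values are hypothetical and chosen for illustration only.

```python
import gzip
import json
import os
import tempfile


def read_header(path):
    """Return all top-level entries of a json.gz file except `obsdata`.

    Hypothetical helper for illustration only, not a pyerrors function.
    """
    with gzip.open(path, "rt", encoding="utf-8") as fin:
        content = json.load(fin)
    return {key: val for key, val in content.items() if key != "obsdata"}


# Hand-built content with made-up values; a real file would list the
# stored structures in `obsdata`, which is left empty here for brevity
content = {
    "program": "example writer",
    "who": "jane",
    "host": "localhost",
    "description": {"note": "hand-built example", "reference": None},
    "obsdata": [],
}

path = os.path.join(tempfile.mkdtemp(), "example.json.gz")
with gzip.open(path, "wt", encoding="utf-8") as fout:
    json.dump(content, fout)

header = read_header(path)
print(sorted(header))
```

The `description` entry is stored as a JSON object here to show that it is not restricted to strings.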

Each entry of the array belongs to a single structure of observables. Currently, these structures can be either of `Obs`, `list`, `numpy.ndarray`, `Corr`. All `Obs` inside a structure (with dimension > 0) have to be defined on the same set of configurations. Different structures, which are represented by entries of the array `obsdata`, are treated independently. Each entry of the array `obsdata` has the following required entries:
- `type` is a string that specifies the type of the structure. This allows the content to be parsed to the correct form after reading the file. It is always possible to interpret the content as a list of `Obs`.
- `value` is an array that contains the mean values of the `Obs` inside the structure.

The following entries are optional:
- `layout` is a string that specifies the layout of multi-dimensional structures. Examples are "2, 2" for a 2x2 dimensional matrix or "64, 4, 4" for a `Corr` with $T=64$ and 4x4 matrices on each time slice. "1" denotes a single `Obs`. Multi-dimensional structures are stored in row-major format (see below).
- `tag` is any JSON type. It contains additional information concerning the structure. The `tag` of an `Obs` in `pyerrors` is written here.
- `reweighted` is a boolean that may be used to specify whether the `Obs` in the structure have been reweighted.
- `data` is an array that contains the data from MC chains. We will define it below.
- `cdata` is an array that contains the data from external quantities with an error (`Covobs` in `pyerrors`). We will define it below.

The array `data` contains the data from MC chains. Each entry of the array corresponds to one ensemble and contains:
- `id`, a string that contains the name of the ensemble
- `replica`, an array that contains an entry per replica of the ensemble.

Each entry of `replica` contains
- `name`, a string that contains the name of the replica
- `deltas`, an array that contains the actual data.

Each entry in `deltas` corresponds to one configuration of the replica and has $1+N$ entries. The first entry is an integer that specifies the configuration number that, together with ensemble and replica name, may be used to uniquely identify the configuration on which the data has been obtained. The following $N$ entries specify the deltas, i.e., the deviation of the observable from the mean value on this configuration, of each `Obs` inside the structure. Multi-dimensional structures are stored in a row-major format. For primary observables, such as correlation functions, $value + delta_i$ matches the primary data obtained on the configuration.

The array `cdata` contains information about the contribution of auxiliary observables, represented by `Covobs` in `pyerrors`, to the total error of the observables. Each entry of the array belongs to one auxiliary covariance matrix and contains:
- `id`, a string that identifies the covariance matrix
- `layout`, a string that defines the dimensions of the $M\times M$ covariance matrix (has to be "M, M" or "1").
- `cov`, an array that contains the $M\times M$ entries of the covariance matrix, stored in row-major format.
- `grad`, an array that contains $N$ entries, one for each `Obs` inside the structure. Each entry itself is an array that contains the $M$ gradients of the Nth observable with respect to the quantity that corresponds to the Mth diagonal entry of the covariance matrix.

A JSON schema that may be used to verify the correctness of a file with respect to the format definition is stored in ./examples/json_schema.json. The schema is a self-descriptive format definition and contains an exemplary file.

Julia I/O routines for the json.gz format, compatible with [ADerrors.jl](https://gitlab.ift.uam-csic.es/alberto/aderrors.jl), can be found [here](https://github.com/fjosw/ADjson.jl).
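
The layout of `data`, `replica` and `deltas` can be made concrete with a small hand-built structure. The following sketch recovers the per-configuration values $value + delta_i$ of two primary observables; the helper `values_per_config`, the names and all numbers are made up for this illustration, and the type string `"List"` is an assumption about how a list of `Obs` is labelled.

```python
def values_per_config(entry):
    """Map (ensemble, replica, configuration) to the list of value + delta.

    Hypothetical helper for illustration only, not a pyerrors function.
    """
    result = {}
    for ensemble in entry["data"]:
        for replica in ensemble["replica"]:
            for row in replica["deltas"]:
                # The first of the 1 + N entries is the configuration number
                config, deltas = row[0], row[1:]
                key = (ensemble["id"], replica["name"], config)
                result[key] = [v + d for v, d in zip(entry["value"], deltas)]
    return result


# Hand-built entry of `obsdata` holding N = 2 observables on three configurations
entry = {
    "type": "List",  # assumed label for a list of Obs
    "layout": "2",
    "value": [1.5, 4.0],
    "data": [
        {
            "id": "ensemble1",
            "replica": [
                {
                    "name": "r01",
                    "deltas": [[1, -0.5, 1.0], [2, 0.25, -1.0], [3, 0.25, 0.0]],
                }
            ],
        }
    ],
}

values = values_per_config(entry)
print(values[("ensemble1", "r01", 2)])
```

In this example the deltas of each observable sum to zero over the three configurations, as expected for deviations from the mean value.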
'''
from .obs import *
from .correlators import *
from .fits import *
from .misc import *
from . import dirac
from . import input
from . import linalg
from . import mpm
from . import roots

from .version import __version__